White Paper: Virtualization

Server Virtualization and Monitoring

Executive Summary

Server virtualization has proven itself time and time again as the most beneficial form of virtualization in the data center. Despite its remaining, though ever-decreasing, limits, server virtualization has had an enormous, positive impact on corporate power and cooling expenses as well as data center capacity wherever it has been applied rigorously. It can extend the lives of aging data centers and even allow some large organizations to shut down some of their data centers.

Virtualization Today

Current technology allows multiple virtual hosts to share a single operating system license. Consolidation can reach the point where 50-100 virtual servers run on a single physical box, and that box can handle high-compute-load applications that would have been considered poor candidates for virtualization five years ago. Virtualization works by freeing applications from the limited and rigid constraints of a single physical server. It allows multiple application hosts to run on one server but, equally important, it allows one application to use resources across the corporate network. A key feature of virtualization is the ability to move applications or guests dynamically from one physical server to another, without service interruption, as demand and resource availability change. You can now also add resources such as CPU, memory, and disk to a virtual machine on the fly, again without service interruption.
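How many guests a single box can carry depends on whichever resource runs out first. The sketch below is a rough density estimate, assuming hypothetical host and guest sizes, an assumed 4:1 vCPU-to-core overcommit ratio, no memory overcommit, and 20% of the host held back for the hypervisor and failover. The class and function names are illustrative only, not any vendor's sizing tool.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    cores: float      # physical cores for a host, vCPUs for a guest
    memory_gb: float


def guests_per_host(host: Resources, guest: Resources,
                    cpu_overcommit: float = 4.0,
                    mem_overcommit: float = 1.0,
                    reserve: float = 0.2) -> int:
    """Estimate how many identical guests fit on one physical host.

    cpu_overcommit: vCPUs allowed per physical core (assumed ratio).
    mem_overcommit: memory overcommit ratio (1.0 means none).
    reserve: fraction of host capacity held back for the hypervisor and
             failover headroom (assumed).
    """
    usable = 1.0 - reserve
    by_cpu = host.cores * cpu_overcommit * usable / guest.cores
    by_mem = host.memory_gb * mem_overcommit * usable / guest.memory_gb
    # Whichever resource runs out first sets the limit.
    return int(min(by_cpu, by_mem))


# Hypothetical 64-core, 1 TB host running 2-vCPU, 12 GB guests.
print(guests_per_host(Resources(64, 1024), Resources(2, 12)))  # memory-bound: 68
```

With these assumed figures memory, not CPU, is the binding constraint, which is why monitoring both resources matters before deciding how densely to consolidate.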

Virtualization also mandates the use of storage area networks (SANs) and detached (networked) storage. This drives the network further into the center of the IT infrastructure and architecture. From an availability standpoint, centralizing multiple applications on a single box creates single points of failure, both in the physical server itself and in its network connection. When a virtualized server crashes, or its network connection slows or breaks, every application on that box is affected. However, many software tools now specialize in high availability for virtualized servers, ranging from incremental backups to full virtual server replication to a backup site that can be brought online with a very short recovery time.
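The single-point-of-failure argument can be quantified with standard availability arithmetic: components that must all work multiply (series), while redundant components fail only if all of them fail (parallel). The sketch below uses hypothetical 99.9% figures and assumes failures are independent, which real shared infrastructure often violates. Whatever number results applies to every guest on that host at once.

```python
from math import prod

HOURS_PER_YEAR = 24 * 365


def series(*availabilities: float) -> float:
    """Every component must be up, e.g. a host AND its single uplink."""
    return prod(availabilities)


def parallel(*availabilities: float) -> float:
    """At least one redundant component must be up, e.g. two uplinks."""
    return 1.0 - prod(1.0 - a for a in availabilities)


def downtime_hours_per_year(availability: float) -> float:
    return (1.0 - availability) * HOURS_PER_YEAR


# Hypothetical figures: host and each uplink are 99.9% available,
# and failures are assumed to be independent.
single_path = series(0.999, 0.999)
redundant = series(0.999, parallel(0.999, 0.999))
print(round(downtime_hours_per_year(single_path), 1))  # about 17.5 h/year
print(round(downtime_hours_per_year(redundant), 1))    # about 8.8 h/year
```

Doubling the uplink roughly halves the expected downtime in this example; what remains is dominated by the host itself, which is where the high-availability tools mentioned above come in.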

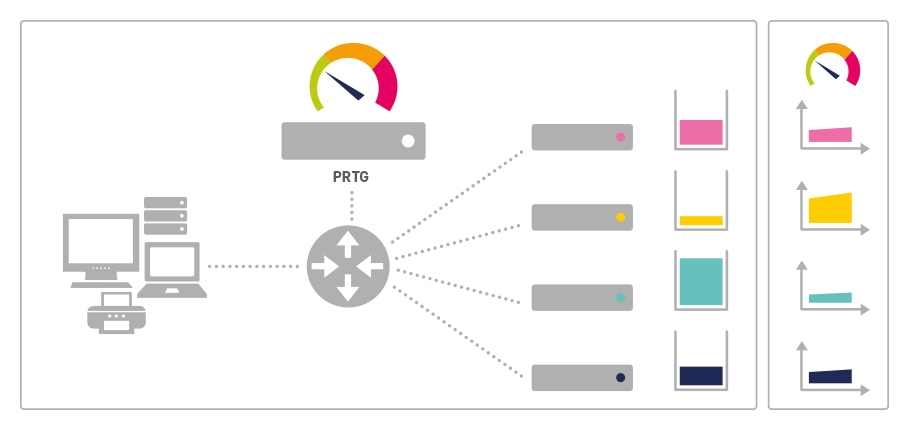

FIGURE: Each application resides on a separate physical server with attached storage

The Network’s Role in Virtualization

The implication for network planning is that much more traffic will be concentrated on a few large servers instead of spread across many smaller computers on the data center floor. In addition, virtualization works best with detached rather than attached storage. This requires very fast, dependable network connectivity between the servers and the storage devices on the storage area network (SAN). Organizations that move away from attached storage as part of virtualization will see a large increase in network traffic. The media used for these networks can also be costly, particularly Fibre Channel connections that provide well over 10 Gbps of bandwidth.
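Once storage traffic leaves the server chassis, the combined I/O of every guest on a host has to fit through its storage links. A minimal capacity check, assuming hypothetical per-guest throughput figures, decimal units, no protocol overhead, and an assumed 70% planning ceiling, might look like this:

```python
from __future__ import annotations


def link_utilization(per_guest_mb_s: list[float], link_gbps: float) -> float:
    """Fraction of one storage link consumed by the guests' combined I/O.

    Uses decimal units (1 MB = 10**6 bytes, 1 Gbps = 10**9 bit/s) and
    ignores protocol overhead, so real headroom is smaller than shown.
    """
    bits_per_s = sum(per_guest_mb_s) * 8 * 10**6
    return bits_per_s / (link_gbps * 10**9)


def over_threshold(utilization: float, threshold: float = 0.7) -> bool:
    # 0.7 is an assumed planning ceiling, not a standard.
    return utilization > threshold


# Hypothetical: 40 guests averaging 25 MB/s each on one 10 Gbps link.
u = link_utilization([25.0] * 40, 10)
print(f"{u:.0%}", over_threshold(u))  # 80% True
```

Traffic that was invisible to the network while disks were directly attached now shows up as link load; in this example a modest 25 MB/s per guest already fills most of a 10 Gbps link.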

All of this increases the need for strong network management.

A highly virtualized environment lives or dies on the efficiency and dependability of its data network. Failure of a physical server, connection, switch or router can be very costly when it disconnects knowledge workers, automated factory floors or online retail operations from vital IT functionality. Network management can supply essential information for planning and testing virtualized environments. For instance, it is important to determine which applications are poor candidates for virtualization, and the amount and character of their network traffic can provide important clues for identifying them.
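“Amount and character” can be made concrete with a few summary statistics over throughput samples: the mean shows the steady load, the 95th percentile shows sustained busy periods, and the peak-to-mean ratio shows burstiness. The sketch below is a generic heuristic under those assumptions, using hypothetical samples; it is not how PRTG or any other product computes its reports.

```python
from __future__ import annotations

from statistics import mean, quantiles


def traffic_profile(samples_mbps: list[float]) -> dict[str, float]:
    """Summarize throughput samples (at least two) taken at a fixed interval."""
    avg = mean(samples_mbps)
    return {
        "mean": avg,
        # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
        "p95": quantiles(samples_mbps, n=20)[-1],
        "peak_to_mean": max(samples_mbps) / avg,
    }


# Hypothetical samples: quiet traffic with one large burst.
profile = traffic_profile([10.0] * 19 + [200.0])
print(round(profile["peak_to_mean"], 1))  # 10.3
```

A high peak-to-mean ratio suggests bursts that may collide with other guests sharing the same uplink, while a consistently high 95th percentile suggests a heavy steady load that may justify closer scrutiny before virtualizing.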

Why Server Virtualization

Server virtualization helps IT departments that are caught between pressure to cut costs in a developing worldwide recession, steadily increasing energy costs, and maxed-out data centers to meet all of those demands. Virtualization attacks the problem of low utilization on single-application servers, which proliferate in the data centers of medium to very large enterprises. Server populations in many non-virtualized environments average about 20% utilization. The result is a huge waste of power, which is effectively doubled because every kilowatt consumed must be balanced by an equal amount of cooling to keep the servers at their optimal operating temperature.
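The arithmetic behind these claims can be sketched in a few lines. The example below encodes the paper’s one-kilowatt-of-cooling-per-kilowatt rule of thumb and a utilization-based host count; the server count, per-server draw, and the assumption that each new host has the same capacity as one old server are all hypothetical simplifications.

```python
import math


def facility_kw(it_kw: float, cooling_ratio: float = 1.0) -> float:
    """IT load plus cooling. cooling_ratio=1.0 encodes the rule of thumb
    that each kilowatt of IT load needs about one more kilowatt of cooling."""
    return it_kw * (1.0 + cooling_ratio)


def hosts_needed(servers: int, util_before_pct: int, util_after_pct: int) -> int:
    """Hosts needed at the target utilization, assuming each new host has the
    same capacity as one old server (a simplification)."""
    return math.ceil(servers * util_before_pct / util_after_pct)


# Hypothetical estate: 100 servers at 20% utilization, 0.25 kW each.
before = facility_kw(100 * 0.25)
# Consolidated to 80% utilization; the busier hosts are assumed to draw 0.5 kW each.
after = facility_kw(hosts_needed(100, 20, 80) * 0.5)
print(hosts_needed(100, 20, 80), before, after)  # 25 50.0 25.0
```

Because cooling scales with IT load in this model, every kilowatt removed from the server floor saves roughly two at the facility level.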

This also has grave implications for the lifespan of data centers, as increasing numbers of facilities run short of power, cooling and, in some cases, floor space despite the move to blade servers over the last few years. It can also become a problem when natural disasters flood facilities or reduce the ability to provide reliable backup power. Virtualization avoids this problem by automating the management of stacking multiple applications on a single server and sharing resources among them.

Saving $2.4 Million in Energy Costs per Year: The BT Example

Virtualization allows IT shops to increase their server utilization to as much as 80%. The impact of this on a large organization is demonstrated by the experience of BT in the UK. BT achieved a 15:1 consolidation of its 3,000 Wintel servers, saving approximately 2 megawatts of power and $2.4 million in annual energy costs. The project also helped reduce server maintenance costs by 90%, and BT disposed of 225 tons of equipment (in an ecologically friendly manner) and closed several data centers across the UK. These savings are even more remarkable because they involved only Wintel servers; BT also has a large population of Unix servers that were not factored into the savings.
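The reported figures can be cross-checked with simple energy arithmetic. The source does not state BT’s electricity tariff, so the sketch below derives the average price per kWh that would turn a continuous 2 MW saving into $2.4 million a year; that price is an inference under the assumption that the saving holds around the clock, not a reported number.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760; ignores leap years


def annual_energy_cost(kw: float, price_per_kwh: float) -> float:
    return kw * HOURS_PER_YEAR * price_per_kwh


def implied_price_per_kwh(kw: float, annual_cost: float) -> float:
    """Average tariff that would turn a continuous saving of `kw` into `annual_cost`."""
    return annual_cost / (kw * HOURS_PER_YEAR)


# Figures reported for BT: about 2 MW and $2.4 million per year.
price = implied_price_per_kwh(2_000, 2_400_000)
print(round(price, 3))  # 0.137 (dollars per kWh, derived, not reported)
print(3_000 // 15)      # 200 hosts implied by a 15:1 consolidation
```

An implied rate of roughly 14 cents per kWh is plausible for a large commercial customer, so the power and cost figures are at least consistent with each other.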

Not only did BT achieve huge operating savings, partly by avoiding the need to build a new data center at an estimated cost of $120 million, it also received three top European ecology awards for the reductions in its carbon footprint from the program. During this process, BT continued to grow its business steadily, which contradicts the common argument that companies and nations must choose between expanding their economies and cutting their environmental impact. Far from hurting business, BT’s project added credibility to the green business consulting practice it launched earlier this decade in both the European Union and the United States.

This is not an extreme example of what can be accomplished. Clearly BT is a very large enterprise. Smaller companies will achieve proportionately smaller savings in absolute numbers, but the percentages will hold up for organizations with server populations of 50 or more. This will allow them to extend the effective lives of their data centers as well as save on energy costs. Even smaller organizations may benefit from virtualization, particularly when facing the need to upgrade their servers. One large server is less expensive to buy, operate, and maintain than even a small population of small servers. The application management automation that virtualization provides can save a smaller organization one or more full-time-equivalent IT employees.
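For a smaller estate, a first-pass consolidation plan can be drafted with a bin-packing heuristic. The sketch below uses first-fit decreasing, a standard heuristic that is not guaranteed to find the minimum number of hosts, on hypothetical peak-CPU figures expressed as a percentage of one new host, with each host capped at an assumed 80%. A real plan would also check memory, disk I/O, and failover capacity.

```python
from __future__ import annotations


def first_fit_decreasing(loads: dict[str, int], host_capacity: int) -> list[list[str]]:
    """Assign workloads to hosts with the first-fit-decreasing heuristic.

    loads: each workload's peak demand, in the same units as host_capacity.
    """
    hosts: list[list[str]] = []
    free: list[int] = []
    # Place the largest workloads first; they are hardest to fit later.
    for name, load in sorted(loads.items(), key=lambda kv: kv[1], reverse=True):
        if load > host_capacity:
            raise ValueError(f"{name} does not fit on one host")
        for i, room in enumerate(free):
            if load <= room:  # first existing host with enough room
                hosts[i].append(name)
                free[i] -= load
                break
        else:  # no existing host had room: open a new one
            hosts.append([name])
            free.append(host_capacity - load)
    return hosts


# Hypothetical peak CPU demand, as a percentage of one new host,
# packed to at most 80% per host to leave headroom.
demand = {"web": 30, "db": 50, "mail": 20, "erp": 40, "files": 10, "crm": 25}
print(first_fit_decreasing(demand, host_capacity=80))
# [['db', 'web'], ['erp', 'crm', 'files'], ['mail']]
```

Here six dedicated servers collapse onto three hosts; a workload too large for any single host raises an error, flagging it as a candidate for a dedicated machine.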

Application Virtualization

These savings are being achieved mostly through hypervisor virtualization as supplied by VMware, Microsoft (Hyper-V), and other vendors. This technology requires each virtual host to run its own copy of the operating system. It is the preferred solution when applications running on different OSs (for instance, a mixed population of Windows, Unix, and Linux, or several different Unix flavors) are being virtualized onto a single physical server. However, it is less efficient when many applications that use the same OS are virtualized.

An emerging approach, “application virtualization,” offers a more efficient solution in these cases by allowing multiple virtualized applications to share a single copy of an OS. Not only can this allow more applications to run on a given physical server; its proponents claim that it will also allow efficient operation of high-compute-load applications that are not good candidates for hypervisor virtualization.
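The efficiency difference comes down to how many OS copies sit in memory. The sketch below compares one OS per guest with a single shared OS, using hypothetical footprints; it ignores hypervisor page sharing and per-application isolation overhead, both of which narrow the gap in practice.

```python
def memory_needed_gb(apps: int, app_gb: float, os_gb: float, shared_os: bool) -> float:
    """Memory for `apps` workloads that either each carry an OS copy
    (hypervisor guests) or share one OS instance."""
    os_copies = 1 if shared_os else apps
    return apps * app_gb + os_copies * os_gb


# Hypothetical: 20 applications needing 2 GB each, OS footprint 2 GB.
print(memory_needed_gb(20, 2.0, 2.0, shared_os=False))  # 80.0
print(memory_needed_gb(20, 2.0, 2.0, shared_os=True))   # 42.0
```

The saving grows linearly with the number of same-OS applications, which is why the shared-OS approach is most attractive when many workloads run the same operating system.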

Unified Monitoring and Virtualization

At first glance it might seem that virtualization by itself would have little impact on unified monitoring: the same applications are running in the same data center, just on different hardware. A closer look, however, shows that it does have implications for unified monitoring, and their extent depends on the pre-virtualization architecture. Overall, virtualization is one of several forces driving the network into the center of the IT infrastructure and displacing the old architecture of 40 physical servers in a rack. This increases the importance of network operations and the potential impact of interruptions, making strong unified monitoring vital to today’s business.

How Virtualization Works

Concentration of Applications

First, virtualization centralizes applications on one or comparatively few physical servers, making those servers and their “last mile” network connections single points of failure that can have a major impact on business operations when they fail. Those who remember the green-screen operations of the 1980s know that when the central computer failed, all work in the office stopped. The failure of a network switch or router in a highly virtualized environment could have a similar impact by cutting off access to as many as 50-100 vital applications. A unified monitoring tool such as Paessler’s PRTG Network Monitor can provide instant notification of any kind of failure on the virtualization host, its guests, and the applications running on those guests. This allows IT to take immediate action to minimize the impact of the failure.
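When one host or switch fails, every guest and application behind it fails too, and a naive monitor raises dozens of alerts for one root cause. A common remedy is dependency-aware alerting. The sketch below is a generic illustration over a hypothetical topology map (switch, host, guests, applications); it is not PRTG’s implementation or API, and it assumes the dependency map contains no cycles.

```python
from __future__ import annotations

# Hypothetical topology: each object maps to the object it depends on.
PARENT = {
    "host-01": "core-switch",
    "guest-erp": "host-01",
    "guest-mail": "host-01",
    "app-erp": "guest-erp",
    "app-mail": "guest-mail",
}


def ancestors(node: str, parent: dict[str, str]):
    """Yield every object that `node` depends on, nearest first (assumes no cycles)."""
    p = parent.get(node)
    while p is not None:
        yield p
        p = parent.get(p)


def root_causes(down: set[str], parent: dict[str, str]) -> set[str]:
    """Failed objects whose dependencies are all still up.

    Anything beneath a failed object is treated as a symptom, so one
    host or switch outage raises one alert instead of dozens.
    """
    return {n for n in down if not any(a in down for a in ancestors(n, parent))}


def blast_radius(failed: str, parent: dict[str, str]) -> set[str]:
    """Every object that depends, directly or indirectly, on `failed`."""
    return {n for n in parent if failed in ancestors(n, parent)}


down = {"host-01", "guest-erp", "guest-mail", "app-erp", "app-mail"}
print(root_causes(down, PARENT))  # {'host-01'}
print(sorted(blast_radius("core-switch", PARENT)))
```

The same map answers both questions an operator asks during an outage: what actually broke, and what else is affected by it.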

Storage Solutions and Virtualization

Part of the promise of server virtualization is the ability to share and shift resources, including disk space, from one application to another dynamically. In a multi-server environment, this mandates the migration from direct-attached storage (in which the disk drives are attached directly to the server, much as they are in desktop and laptop computers) to separate storage systems on a storage area network (SAN), which becomes part of the overall network infrastructure. This migration has been ongoing since the late 1990s and has several advantages in terms of flexibility and data protection. It will continue to accelerate as server virtualization works its way into the last remaining data centers that still use direct-attached storage for production servers.

The disadvantage is that all access between applications and data now must run over a network, and even small delays can create issues for many applications. Thus, virtualized environments cannot tolerate network overloads or switch failures. This requires strong network management. A strong unified monitoring tool such as Paessler’s PRTG Network Monitor can provide real-time graphical monitoring of traffic at critical points in the network as well as long-term usage projections. This helps network managers anticipate traffic increases before they affect service levels. You can monitor your storage networks separately from your production networks and track the impact each has on the other, which gives you the information you need when planning upgrades to the critical infrastructure that connects the two networks.
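One simple way to turn historical traffic into a usage projection is to fit a straight line and extrapolate to a capacity threshold. The sketch below does this with ordinary least squares on hypothetical monthly utilization samples for a storage uplink. A linear trend is an assumption: growth that follows a hockey-stick curve will cross the threshold sooner than this projects.

```python
from __future__ import annotations


def linear_fit(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def days_until(threshold: float, days: list[float], load: list[float]) -> float | None:
    """Days after the last sample until the fitted trend reaches `threshold`."""
    slope, intercept = linear_fit(days, load)
    if slope <= 0:
        return None  # flat or falling trend: no crossing projected
    return (threshold - intercept) / slope - days[-1]


# Hypothetical monthly link utilization (%) for a storage uplink.
days = [0, 30, 60, 90]
util = [40, 45, 50, 55]
print(round(days_until(70, days, util)))  # 90
```

A negative result means the trend line has already passed the threshold, which is itself a useful warning sign.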

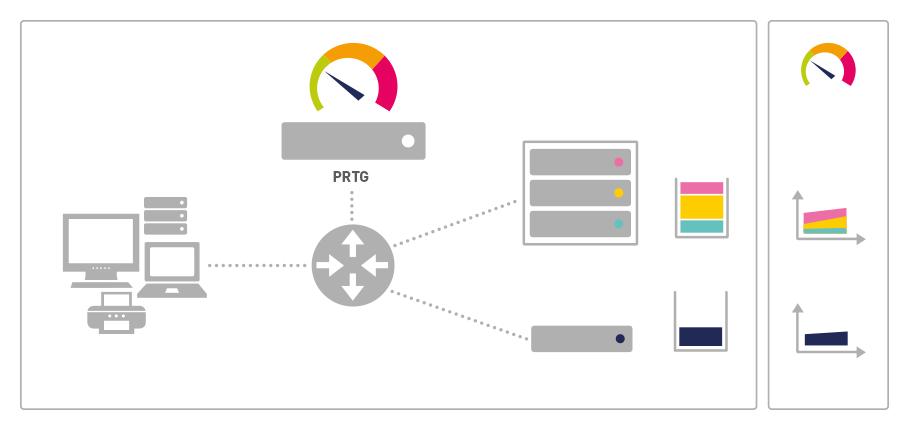

FIGURE: Three applications run in a virtual environment sharing the resources of the virtualization server

Unsuitable Applications for Virtualization

There will always be applications that require resources best delivered by a dedicated host. Even for PRTG, we recommend a dedicated host for installations with more than 5,000 sensors. However, the list of unsuitable applications keeps shrinking as the technology continues to improve. Identifying which applications should remain on dedicated servers is not always easy. The volume and character of traffic to and from these applications provide major clues for identifying them, and a strong unified monitoring tool such as PRTG Network Monitor is the best source for that vital information. Whether the issue is intense CPU usage or queueing, high disk I/O demands, or compliance requirements, PRTG can help you identify these unique requirements, allowing you to be more strategic when purchasing hardware for the applications that need a dedicated host.
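The criteria listed above (CPU usage and queueing, disk I/O, compliance) can be turned into a first-pass screening rule. The sketch below is a generic illustration with hypothetical metric names and thresholds; it is not a PRTG feature, and the limits should be tuned to your own hardware and policies.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    avg_cpu_pct: float          # average CPU use on its current server
    cpu_queue_len: float        # average processor queue length
    disk_iops: float            # average disk I/O operations per second
    needs_isolation: bool       # e.g. a compliance rule requiring its own hardware


# Hypothetical thresholds; tune them to your own hardware and policies.
LIMITS = {"avg_cpu_pct": 70.0, "cpu_queue_len": 2.0, "disk_iops": 20_000.0}


def dedicated_host_reasons(w: Workload) -> list[str]:
    """List the reasons (if any) to keep a workload on a dedicated host."""
    reasons = [m for m, limit in LIMITS.items() if getattr(w, m) > limit]
    if w.needs_isolation:
        reasons.append("compliance")
    return reasons


print(dedicated_host_reasons(Workload("sql", 85.0, 1.0, 30_000.0, False)))
# ['avg_cpu_pct', 'disk_iops']
print(dedicated_host_reasons(Workload("intranet", 15.0, 0.2, 500.0, False)))
# []
```

Returning the reasons rather than a yes/no answer keeps the screening transparent, so a flagged workload can be reviewed against the specific metric that triggered it.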

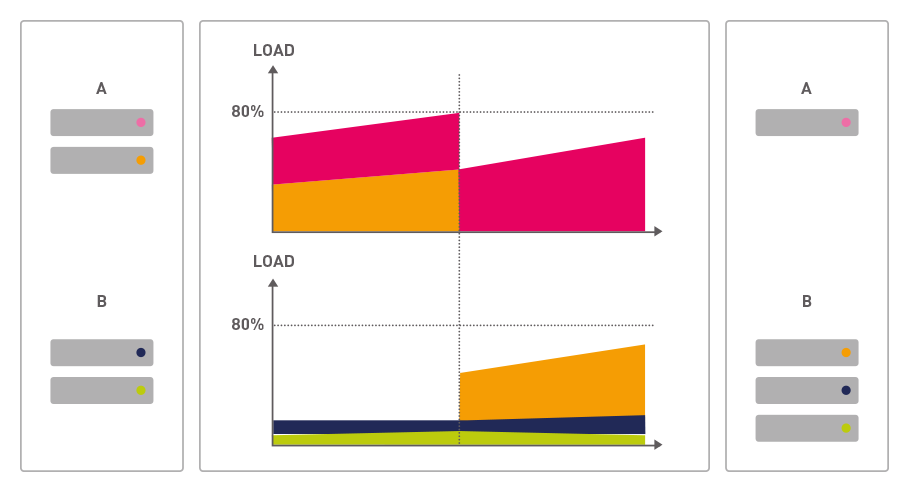

Virtualization and Resource Management

Server virtualization promises dynamic reassignment of underlying compute, memory, and storage resources to meet spikes in demand on individual applications. This is the real key to increasing efficiency in resource allocation. One major reason that unvirtualized environments are characterized by such low utilization is that each application needs sufficient computing power, memory, and storage to meet its maximum load, plus an overhead to accommodate future growth. The speed of growth is often difficult to predict, because it tends to follow a “hockey stick” graph rather than a smooth curve: usage is low while users learn a new application and then may jump quickly as they become comfortable with it and realize its potential for improving their work. Many applications also experience major cyclical variations in demand that may be weekly, monthly, or annual. Financial applications are the obvious example, with very large monthly and quarterly use cycles, but many others exist.
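The link between peak-based sizing and low average utilization is easy to show numerically. The sketch below sizes a dedicated server for its peak plus a growth margin and then measures how busy it is on average, using a hypothetical financial application with one heavy period per year and an assumed 50% headroom.

```python
from __future__ import annotations


def static_capacity(peak_load: float, growth_headroom: float) -> float:
    """Capacity bought for a dedicated server: peak load plus a growth margin."""
    return peak_load * (1.0 + growth_headroom)


def average_utilization(loads: list[float], capacity: float) -> float:
    return sum(loads) / len(loads) / capacity


# Hypothetical financial application: quiet for eleven months, then a
# year-end close at five times normal demand. 50% growth headroom assumed.
monthly = [10.0] * 11 + [50.0]
capacity = static_capacity(max(monthly), 0.5)
print(capacity, round(average_utilization(monthly, capacity), 2))  # 75.0 0.18
```

Even with made-up numbers, sizing for the peak lands average utilization below 20%, in line with the figure cited earlier for non-virtualized server populations.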

FIGURE: With increasing user acceptance, a new application (red) may require more resources. This can easily be achieved at time tx by moving one application (blue) to another virtual machine.

Again, in the unvirtualized environment, each application must have enough computing resources to deliver promised service levels under its maximum annual load. For some applications, this means a heavy investment in computing resources that will remain idle much of the time. Virtualization removes this problem by reallocating computing resources dynamically. The separate applications running in a data center often have demand cycles that peak at different times, so an application experiencing a surge in demand can “borrow” resources from another that is in a low period of its cycle. In the extreme case, virtualization vendors are promising the capability to move an entire application or guest dynamically across the network from one server to another in response to such variations. Ultimately, this implies that all the servers and storage devices running virtualized environments can, for planning purposes, be treated as one large machine whose resources are pooled to meet the total demand of all the virtualized software running on them.
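The benefit of borrowing comes from the gap between the sum of each application’s own peak (what dedicated servers must provide) and the peak of their combined demand (what a shared pool must provide). The sketch below computes both for three hypothetical applications whose cycles peak in different periods. It assumes resources can be reallocated perfectly and instantly; a real pool still needs headroom.

```python
from __future__ import annotations


def sum_of_peaks(demand: dict[str, list[int]]) -> int:
    """Capacity needed when every application is sized for its own peak."""
    return sum(max(series) for series in demand.values())


def peak_of_sum(demand: dict[str, list[int]]) -> int:
    """Capacity needed when all applications draw from one shared pool."""
    return max(sum(period) for period in zip(*demand.values()))


# Hypothetical demand per period (arbitrary units) with offset cycles.
demand = {
    "finance": [20, 20, 80],
    "retail":  [80, 30, 20],
    "hr":      [30, 60, 20],
}
print(sum_of_peaks(demand), peak_of_sum(demand))  # 220 130
```

The more the cycles are offset from one another, the larger this gap becomes; if every application peaked in the same period, pooling would save nothing.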

As a practical matter, this may only work inside a single data center since transmission delays between centers could introduce performance issues. And, it will not work at all unless the network connecting these physical servers is fast and highly fault tolerant. This requires high levels of network management to guarantee performance.
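The distance limit follows from physics: light travels roughly 200 km per millisecond in optical fiber, so distance sets a floor on round-trip time that no amount of bandwidth removes. The sketch below computes that propagation-only floor and the resulting ceiling for strictly sequential synchronous I/Os, each assumed to wait one full round trip. Switching, queueing, and indirect fiber routes all add to these numbers.

```python
FIBER_KM_PER_MS = 200.0  # light covers roughly 200 km per millisecond in fiber


def min_round_trip_ms(distance_km: float) -> float:
    """Propagation-only lower bound; switching, queueing and detours add more."""
    return 2 * distance_km / FIBER_KM_PER_MS


def max_serial_ios_per_s(rtt_ms: float) -> float:
    """Ceiling for strictly sequential synchronous I/Os that each wait one round trip."""
    return 1000.0 / rtt_ms


for km in (1, 100, 1000):
    rtt = min_round_trip_ms(km)
    print(km, rtt, max_serial_ios_per_s(rtt))
```

At 100 km the round trip is already at least 1 ms, capping a single stream of dependent synchronous I/Os at about 1,000 per second, which is why tightly coupled resource sharing works best within one data center.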